
Wisdom is not the product of schooling but the lifelong attempt to acquire it. – Albert Einstein


Exploiting the Power of High-Functionality Environments (HFEs) with Socio-Technical Support Systems

Gerhard Fischer

Introductory Remarks, Breckenridge Symposium, Sep 30 – Oct 2, 2016

Initial Schedule

Friday

- 1:30 – 3:30 Session 1: Background Information and Personal Experiences of Each Participant with High-Functionality Environments

- 4:00 – 6:00 Session 2: Identification of Fundamental Challenges Based on the Individual Contributions

Saturday

- 8:30 – 10:30 Session 3: Identification and Exploration of Research Challenges of Current and Future High-Functionality Environments

- 11:00 – 1:00 Session 4: Identification and Exploration of Research Challenges of Current and Future High-Functionality Environments

Sunday

- 8:30 – 10:30 Session 5: Design Requirements for Socio-Technical Environments to cope with High-Functionality Environments

- 11:00 – 1:00 Session 6: Summarizing Reflections and Findings from the Symposium

Definition of High-Functionality Environments (HFEs): Using Examples

- Unix → Symbolics → Microsoft Word

- Domain-Oriented Design Environments

- Software Reuse and Redesign Environments

- McGuckin Hardware Store

- Environments created by Cultures of Participation (Open Source Software Systems, 3D Warehouse, Scratch, Wikipedia)

- 5 million apps on smartphones

- MOOCs (free learning resources; approx. 4000 courses world-wide)

More Examples of HFEs

- booking a flight

- renting a car

- selecting a telecommunications company

- selecting a retirement plan and investment options

- general characteristics of these developments

  - choice overload in today’s societies → “more is more” (compared to “less is more”)

  - DIY (“do it yourself”) societies: we become our own travel agents, … → “with a computer, one person can do everything may look not forward, but back to the stage in social evolution before anyone noticed the advantages of the division of labor” (Brown, J. S. & Duguid, P. (2000) “The Social Life of Information”)

HFEs — Why Do They Exist?

- “Reality is not User-Friendly” (the world is complex; our tools need to match that complexity) — usable versus useful → usable and useful

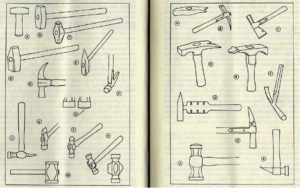

- example: 500 hammers (Basalla, G. (1988) The Evolution of Technology)

My View on Participants’ Involvement with HFEs

- Hal Eden (Rootgroup, Boulder): support for reflection-in-action and making information relevant to the task at hand

- Yunwen Ye (Uber, Louisville, CO): contextualized information delivery in large software repositories

- David Redmiles (UC Irvine, CA): comprehending the information found (the C in the “location/comprehension/modification” (LCM) model)

- Jonathan Ostwald (UCAR, Boulder): living organizational memories and their relevance for HFEs

- Brent Reeves (Abilene University, TX): author of the McGuckin case study (a success story of coping with an HFE)

- John Bacus (Trimble, Boulder): SketchUp and 3D Warehouse as case studies for HFEs

- Michael Wright (NCAR, Boulder): the impact of HFEs on scholarly communication and technology-supported learning


- Yi (“Oliver”) Wang (Dakota State University): HFEs in a curriculum in a College of Business and Information Systems

- Kumiyo Nakakoji (Kyoto University, Japan): museums as an example of an HFE; impact of specification components to cope with HFEs

- Mark Gross (ATLAS, CU Boulder): makerspaces as an example of an HFE

- Ricarose Roque (Info Science, CU Boulder): Scratch — an example

- Brian Keegan (Info Science, CU Boulder): peer production and crowdsourcing systems (e.g.: Wikipedia, OpenStreetMap)

- Tamara Sumner (ICS, CU Boulder): communities of practice, tools, and services enabling learning organizations and educational institutions to contextualize and manage HFEs and digital content

- Gerhard Fischer (CS, CU Boulder): HFEs: Design Trade-Offs, Promises, and Pitfalls

Socio-Technical Support Systems to Cope with and Exploit the Power of HFEs

- automate instead of informate

- automatic transmission in cars

- auto-correct in MS-Word

- have a fixed retirement plan (Germany) rather than offering different options and possibilities (USA)

- distributing activities among communities instead of putting owners of problems in charge

- local developers and power users (Nardi, B. A. (1993) A Small Matter of Programming)

- unself-conscious cultures of design (Alexander, C. (1964) The Synthesis of Form)

- Renaissance Scholars versus Renaissance communities — example: L3D

- support learning on demand (or using on demand) to prevent people from settling on plateaus of suboptimal behavior (main streets versus side streets metaphor)

- active help systems, critiquing systems, …

- context-aware (user- and task-relevant) information delivery

Computing User- and Task-Relevant Information Delivery

<< transcending the shortcomings of “Did You Know” and “Tip of the Day”>>

Participation Overload in Cultures of Participation

- check out groceries, check in at airports, arrange travel, take care of banking needs, write and typeset papers, …

- vote in democracies, determine retirement plans, control energy consumption, contribute ideas and insights to shared information repositories (such as Wikipedia, 3D Warehouse, open source environments), …

- provide feedback about services — e.g.: for hotels, flights, repair shops, support provided via the Internet, …

- modify, customize, or create systems in EUD environments

Richard H. Thaler and Cass R. Sunstein: “Nudge: Improving Decisions about Health, Wealth, and Happiness”

Core Arguments of “Nudge”

- libertarian paternalism: integrating / synthesizing two notions viewed as being at odds, paternalism and libertarianism

- the libertarian aspect: “people should be free to do what they like —and to opt out of undesirable arrangements if they want to do so”

- the paternalistic aspect: “it is legitimate for choice architects to try to influence people’s behavior in order to make their lives longer, healthier, and better”

- Choice architecture — decisions are influenced by how the choices are presented

- example: retirement savings

- example: organ donations
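Choice architecture can be illustrated with a toy enrollment model. This is a minimal sketch under stated assumptions: the `Person` record, the five-person population, and the function names are hypothetical, not data or terminology from “Nudge”. The default fills in only for people who made no explicit choice (the paternalistic aspect), and an explicit choice always overrides it (the libertarian aspect).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Person:
    name: str
    explicit_choice: Optional[bool] = None  # None: the person never made an active choice


def enrolled(person: Person, default_enrolled: bool) -> bool:
    """Effective enrollment: an explicit choice always wins over the default."""
    if person.explicit_choice is not None:
        return person.explicit_choice  # libertarian aspect: opting out (or in) is free
    return default_enrolled            # paternalistic aspect: the default decides


def participation_rate(people: List[Person], default_enrolled: bool) -> float:
    """Fraction of people enrolled under a given default."""
    if not people:
        return 0.0
    return sum(enrolled(p, default_enrolled) for p in people) / len(people)


if __name__ == "__main__":
    # Hypothetical population: three people never choose, one opts out, one opts in.
    people = [
        Person("a"),
        Person("b"),
        Person("c"),
        Person("d", explicit_choice=False),
        Person("e", explicit_choice=True),
    ]
    print(participation_rate(people, default_enrolled=False))  # opt-in design
    print(participation_rate(people, default_enrolled=True))   # opt-out design
```

With identical (hypothetical) preferences, the script prints 0.2 under the opt-in default and 0.8 under the opt-out default; only the presentation of the choice changed.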

Situation versus System Models

Identifying the Real Problem

- Customer: I want to get a couple of heaters for a downstairs hallway.

- Sales Agent: What are you doing? What are you trying to heat?

- Customer: I’m trying to heat a downstairs hallway.

- Sales Agent: How high are the ceilings?

- Customer: Normal, about eight feet.

- Sales Agent: Okay, how about these here? (They proceed to agree on two heaters.)

- Customer: Well, the reason it gets so cold is that there’s a staircase at the end of the hallway.

- Sales Agent: Where do the stairs lead?

- Customer: They go up to a landing with a cathedral ceiling.

- Sales Agent: OK, maybe you can just put a door across the stairs, or put a ceiling fan up to blow the hot air back down.

Understandability and Common Ground

Information Delivery and Intrusiveness

The ‘Right’ Information, at the ‘Right’ Time, in the ‘Right’ Place, in the ‘Right’ Way to the ‘Right’ Person

<<‘right’ is in quotes because in most cases there is no simple ‘right’ or ‘wrong’>>

- the ‘right’ information — requires task modeling (the task can be inferred from partial constructions in design, from interests derived from previous actions (e.g.: books bought, movies watched), or described via specification components)

- the ‘right’ time — addresses intrusiveness of information delivery (e.g.: when to notify a user about the arrival of an e-mail message, when to critique a user about a problematic design decision); it requires balancing the costs of intrusive interruptions against the loss of context-sensitivity of deferred alerts

… in the ‘Right’ Place, in the ‘Right’ Way to the ‘Right’ Person

- the ‘right’ place — takes location-based information into account

- the ‘right’ way — differentiates between multimodal representations; e.g.: using multimedia to exploit different sensory channels is especially critical for users with disabilities

- the ‘right’ person — requires user modeling; e.g.: to support personalization
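The trade-off named under the ‘right’ time can be made concrete with a toy cost model. This is a minimal sketch, not a method from the source: it assumes that relevance decays linearly while an alert is deferred and that a user model supplies a hypothetical `busyness` score between 0 and 1. An alert is pushed now only when the relevance lost by waiting exceeds the cost of interrupting; the weights and example alerts are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    message: str
    relevance_now: float     # 0..1, relevance to the current task (hypothetical task model)
    decay_per_minute: float  # assumed linear loss of relevance while the alert waits


def deferral_loss(alert: Alert, defer_minutes: float) -> float:
    """Relevance lost if delivery is postponed (linear decay, floored at zero)."""
    later = max(0.0, alert.relevance_now - alert.decay_per_minute * defer_minutes)
    return alert.relevance_now - later


def interruption_cost(busyness: float, weight: float = 0.5) -> float:
    """Cost of interrupting now; `busyness` (0..1) is a hypothetical user-model signal."""
    return weight * busyness


def deliver_now(alert: Alert, busyness: float,
                defer_minutes: float = 10.0, weight: float = 0.5) -> bool:
    """Push now only if waiting would lose more relevance than interrupting costs."""
    return deferral_loss(alert, defer_minutes) > interruption_cost(busyness, weight)


if __name__ == "__main__":
    critique = Alert("Stove placed in front of a window", 0.9, 0.08)
    email = Alert("New e-mail message", 0.3, 0.01)
    print(deliver_now(critique, busyness=0.9))  # relevance fades fast: interrupt
    print(deliver_now(email, busyness=0.9))     # user is busy, little is lost: defer
    print(deliver_now(email, busyness=0.1))     # user is idle: cheap to deliver
```

The three calls print True, False, True: the design critique loses most of its context-sensitivity if deferred, while the e-mail notice can wait until the user is less busy.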

Information Sharing: Access (“Pull”) and / or Delivery (“Push”)

| | access (“pull”) | delivery (“push”) |
|---|---|---|
| examples | browsing, search engines, bookmarks, passive help systems | Microsoft’s “Tip of the Day”, broadcast systems, critiquing, active help systems |
| strengths | non-intrusive, user controlled | serendipity, creating awareness for relevant information |
| weaknesses | task-relevant knowledge may remain hidden because users cannot specify it in a query | intrusiveness, too much decontextualized information |
| major system design challenges | supporting users in expressing queries, better indexing and searching algorithms | context awareness (intent recognition, task models, user models, relevance to the task at hand) |

Adaptation Mechanism to Control Different Critiquing Rule Sets and Different Intervention Strategies
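The slide title names two adaptation knobs: which critiquing rule sets are in force, and whether the critic intervenes actively or passively. The sketch below is a minimal illustration under stated assumptions: the rule sets, the kitchen-style thresholds, the `dismiss_limit` heuristic, and all names are hypothetical placeholders rather than rules from any particular system. Enabling and disabling rule sets is the adaptable part (the user is in control); silencing a rule after repeated dismissals is the adaptive part (the system adjusts itself).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

Design = Dict[str, float]  # hypothetical design: feature name -> measured value


@dataclass
class CriticRule:
    name: str
    rule_set: str                        # hypothetical grouping, e.g. "safety"
    violated: Callable[[Design], bool]   # predicate over the design
    message: str


@dataclass
class Critic:
    rules: List[CriticRule]
    enabled_sets: Set[str] = field(default_factory=set)
    strategy: str = "active"             # "active": push critiques; "passive": only on request
    dismiss_limit: int = 3               # assumed threshold for the adaptive behavior
    dismissals: Dict[str, int] = field(default_factory=dict)

    # Adaptable: the user decides which rule sets are in force.
    def enable(self, rule_set: str) -> None:
        self.enabled_sets.add(rule_set)

    def disable(self, rule_set: str) -> None:
        self.enabled_sets.discard(rule_set)

    # Adaptive: the critic silences a rule the user keeps dismissing.
    def dismiss(self, rule_name: str) -> None:
        self.dismissals[rule_name] = self.dismissals.get(rule_name, 0) + 1

    def critique(self, design: Design, requested: bool = False) -> List[str]:
        """Return messages of all enabled, non-silenced rules the design violates."""
        if self.strategy == "passive" and not requested:
            return []
        return [
            r.message
            for r in self.rules
            if r.rule_set in self.enabled_sets
            and self.dismissals.get(r.name, 0) < self.dismiss_limit
            and r.violated(design)
        ]


if __name__ == "__main__":
    rules = [
        CriticRule("stove_window", "safety",
                   lambda d: d["stove_to_window_m"] < 1.0, "Stove is close to a window."),
        CriticRule("work_triangle", "efficiency",
                   lambda d: d["work_triangle_m"] > 7.0, "Work triangle is long."),
    ]
    critic = Critic(rules, enabled_sets={"safety", "efficiency"})
    design = {"stove_to_window_m": 0.5, "work_triangle_m": 8.0}
    print(critic.critique(design))   # both rules fire
    critic.disable("efficiency")     # user narrows the rule sets
    print(critic.critique(design))   # only the safety critique remains
```

Setting `strategy` to `"passive"` turns the critic into a passive help system that speaks only when `critique(design, requested=True)` is called; calling `dismiss("stove_window")` three times silences that rule without the user editing any rule set.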

Some Challenging Research Problems

- identify user goals from low-level interactions

- active help systems

- data detectors

- integrate different modeling techniques

- domain-orientation

- explicit and implicit

- give a user specific problems to solve

- capture the larger (often unarticulated) context and what users are doing (especially beyond the direct interaction with the computer system)

- embedded communication

- ubiquitous computing

- reduce information overload by making information relevant

  - to the task at hand

  - to the assumed background knowledge of the users

- support differential descriptions (relate new information to information and concepts assumed to be known by the user)
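Making information relevant to the task at hand and to the user’s assumed background knowledge, and describing new items differentially, can be sketched as a filter over a catalog of functionality. This is a minimal sketch under stated assumptions: the task model is reduced to a hypothetical set of task tags per item, the user model to the set of item names the user already knows, and the editor commands in the example are illustrative only.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Optional, Set


@dataclass(frozen=True)
class Item:
    name: str
    tasks: FrozenSet[str]              # hypothetical task model: tasks this item serves
    description: str                   # full, stand-alone description
    related_to: Optional[str] = None   # a concept it can be explained against
    difference: Optional[str] = None   # what sets it apart from `related_to`


def select_for_delivery(items: Iterable[Item], task: str, known: Set[str]) -> List[Item]:
    """Keep items relevant to the task at hand that the user does not already know."""
    return [it for it in items if task in it.tasks and it.name not in known]


def describe(item: Item, known: Set[str]) -> str:
    """Use a differential description when the user knows the related concept."""
    if item.related_to in known and item.difference:
        return f"{item.name}: like {item.related_to}, except that {item.difference}"
    return f"{item.name}: {item.description}"


if __name__ == "__main__":
    catalog = [
        Item("find", frozenset({"editing"}), "Locates text in the document."),
        Item("find-and-replace", frozenset({"editing"}),
             "Locates text and substitutes new text for it.",
             related_to="find", difference="it also substitutes new text for each match"),
        Item("mail-merge", frozenset({"letters"}), "Fills a letter template from a data source."),
    ]
    known = {"find"}  # hypothetical user model
    for item in select_for_delivery(catalog, "editing", known):
        print(describe(item, known))
```

For a user who already knows `find` and is editing, only `find-and-replace` is delivered, and it is explained relative to `find` rather than from scratch; `mail-merge` stays hidden because it does not serve the current task.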

A Comparison between Adaptive and Adaptable Systems

| | Adaptive | Adaptable |
|---|---|---|
| Definition | dynamic adaptation by the system itself to current task and current user | user changes (with substantial system support) the functionality of the system |
| Knowledge | contained in the system; projected in different ways | knowledge is extended |
| Strengths | little (or no) effort by the user; no special knowledge of the user is required | user is in control; system knowledge will fit better; success model exists |
| Weaknesses | user has difficulty developing a coherent model of the system; loss of control; few (if any) success models exist (except humans) | systems become incompatible; user must do substantial work; complexity is increased (user needs to learn the adaptation component) |
| Mechanisms Required | models of users, tasks and dialogs; knowledge base of goals and plans; powerful matching capabilities; incremental update of models | layered architecture; human problem-domain communication; “back-talk” from the system; design rationale |
| Application Domains | active help systems; critiquing systems; differential descriptions; user interface customization | end-user modifiability, tailorability, filtering, design in use |

Three Levels of Learning on Demand

| Level | Description | Strengths | Weaknesses |
|---|---|---|---|
| Fix-It Level | fix the problem by giving a solution without understanding; pure performance support | keep focus on task; learning does not delay work | creates little or no understanding |
| Reflect Level | explore argumentative context for reflection | understanding of specific issues | piecemeal learning of (disconnected) issues |
| Contextual Tutoring Level | provide contextualized tutoring (not lecturing on unrelated issues) | systematic presentation of a coherent body of knowledge | substantial time requirements |

Simplicity and Complexity: A Fundamental Trade-off in Design

Simplicity is not an absolute quality. It depends on people’s experience, knowledge, understanding, and skills. Judging something as complex or simple requires a frame of reference. This presentation will argue that modeling a complex world requires complex systems (“Reality is not user-friendly”). It will describe approaches that we have pursued to cope with high-functionality environments, including how to use internal complexity to achieve external simplicity (with layered architectures and by supporting human problem-domain interaction), and it will provide evidence that learning on demand is not a luxury but a necessity for coping with and taking advantage of complex, functionality-rich environments.

- Blaise Pascal: “I have made this letter longer than usual, because I lack the time to make it shorter.” — Provincial Letters XVI

- Antoine de Saint-Exupéry (aviator, aircraft designer, author of classic children’s books): “Perfection (in design) is achieved not when there is nothing more to add, but rather when there is nothing more to take away”

- Maeda: “deeming something as complex or simple requires a frame of reference”

Progress in Science and Technology

cannot do it → can do it → should it be done?

- can do it ↓ technology impact

- should it be done? ↓ ethics, quality of life, values, choice, control, autonomy

- ↓ design trade-offs

- for every problem — there is a technological solution (Silicon Valley)

“ICT for Quality of Life”: usable → useful → engaging → quality of life

- new professionals with a passion for creativity and innovation who want to make a difference in people’s lives

- having a desire to look, listen, ask, learn, understand, and empathize with the people for whom they create solutions

Othello: Low Threshold → <flow> → High Ceiling

why this is simple in one dimension (the example captures only one aspect): the game remains the same and the rules governing the game do not change → different for HFEs / information spaces / ecologies in the world of today (using a system breeds new demands)

Some complexity is desirable. When things are too simple, they are also viewed as dull and uneventful. Psychologists have demonstrated that people prefer a middle level of complexity: too simple and we are bored, too complex and we are confused. Moreover, the ideal level of complexity is a moving target, because the more expert we become at any subject, the more complexity we prefer.